Whalematch

A project designed and developed by Rich Bean, Eric W. Brown, Rhianon Brown, Ted Greene, Isaac Vandor, and subject matter expert Chris Zadra.

Introduction

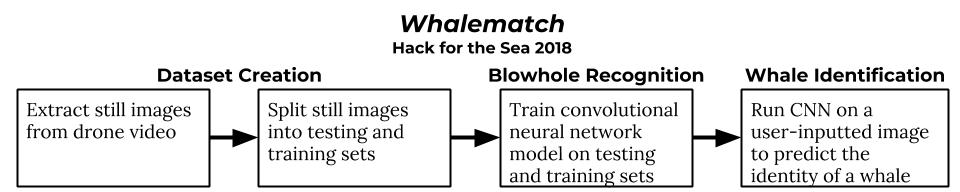

Whalematch is a Hack for the Sea 2018 project designed to detect, identify, and classify whales based on their blowholes.

Using a dataset provided by Ocean Alliance, we built a framework that extracts still frames from drone footage shot over a whale, splits those stills into training and testing sets, trains a convolutional neural network model on them, and then predicts the unique identifier of a whale given an input image. The block diagram below outlines the way data is passed through Whalematch to create a successful detection.
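The splitting step can be sketched in a few lines of Python. This is a minimal illustration, not the code in the repository: the `split_frames` helper, the 80/20 ratio, and the frame file names are all assumptions made for the example.

```python
import random
from pathlib import Path


def split_frames(frame_paths, test_fraction=0.2, seed=0):
    """Shuffle frame paths reproducibly and split them into (train, test)."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    paths = sorted(frame_paths)  # sort first so the shuffle is reproducible
    random.Random(seed).shuffle(paths)
    # Keep at least one test frame whenever there is any data at all.
    n_test = max(1, round(len(paths) * test_fraction)) if paths else 0
    return paths[n_test:], paths[:n_test]


# Hypothetical frame names; real frames would come from the drone footage.
frames = [Path(f"frames/whale_a_{i:03d}.jpg") for i in range(10)]
train, test = split_frames(frames, test_fraction=0.2)
print(len(train), len(test))
```

Fixing the seed keeps the split stable between runs, which makes it easier to compare models trained with different settings.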

Things We Tried that Didn’t Work

We tried two different approaches to the identification and classification problem: one used computer vision techniques while the other used machine learning techniques. Ultimately, the machine learning approach produced more accurate classifications. Future work could include a blending of these approaches, such as using computer vision to pre-process images and improve the machine learning model.

OpenCV

- Haar classification using the full dataset of positive blowholes

- Haar classification using an auto-generated dataset

Machine Learning (Keras)

- A CNN using the SGD optimizer

- A CNN using the Adadelta optimizer

- A CNN using the RMSprop optimizer

For more on the OpenCV approach, see this tutorial on face recognition using Haar classifiers. For more on the machine learning approaches, see this tutorial on face recognition using Keras.

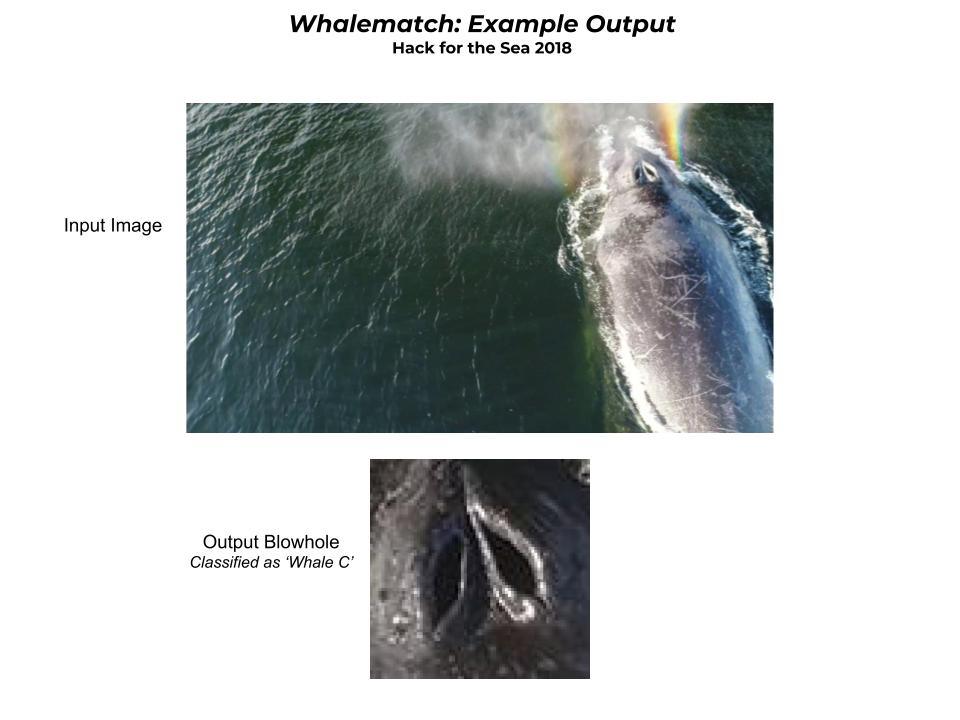

The End Product

Ultimately, the machine learning approach utilizing a convolutional neural net and the Adam optimizer worked best for recognizing and classifying blowholes. Example output from running Whalematch can be seen below.
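To illustrate the final classification step, the sketch below turns a vector of per-whale scores into a single label. It assumes the model emits one score per known whale and that whales are labelled with letters; the `decode_prediction` helper, the labels, and the scores are hypothetical and are not taken from the repository.

```python
import string


def decode_prediction(probabilities, labels):
    """Return (label, probability) for the highest-scoring class."""
    if len(probabilities) != len(labels):
        raise ValueError("need exactly one probability per label")
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return labels[best], probabilities[best]


# Hypothetical labels: one letter per known whale.
labels = list(string.ascii_uppercase[:4])
# Hypothetical model output for one image (one score per whale).
scores = [0.05, 0.10, 0.80, 0.05]
label, confidence = decode_prediction(scores, labels)
print(label, confidence)
```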

Future Work and Improvements

A (not even close to complete) list of things to make Whalematch 2.0 better:

- A larger dataset (both in size and variation) of images without a blowhole for training and images with a blowhole for testing

- A dataset mapping whale names to blowholes (for labelling the testing data with more than alphabetical letters)

- Fine-tuning the convolutional neural network model for this task specifically

- An automatic method for generating the dataset

- Investigation into how we can improve results by using blowhole movement time signatures from video streams

Running the code yourself

To run the code yourself, you’ll need to:

- Clone this repository:

git clone https://github.com/hackforthesea/whalematch.git

- If you don’t have pip installed, install it:

sudo apt-get install python-pip python-dev build-essential

- cd into the whalematch folder where you cloned the whalematch repository

- Install the dependencies:

pip install -r requirements.txt

- Run prediction.py with a command-line argument naming your input image. Example command to run classification:

python prediction.py -i 'whale589.jpg'
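For readers curious how a script might accept the `-i` flag used above, here is a minimal sketch with Python's standard `argparse` module. It is an assumption about how such a flag could be handled, not a copy of `prediction.py`; the `parse_args` helper and the long option name `--image` are hypothetical.

```python
import argparse


def parse_args(argv=None):
    """Parse the command line; argv=None means 'use sys.argv'."""
    parser = argparse.ArgumentParser(description="Classify a whale blowhole image.")
    # "-i" matches the example command; "--image" is an assumed long form.
    parser.add_argument("-i", "--image", required=True, help="path to the input image")
    return parser.parse_args(argv)


# Simulate: python prediction.py -i 'whale589.jpg'
args = parse_args(["-i", "whale589.jpg"])
print(args.image)
```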

Optional: Running with Docker (requires Docker > 0.5.3)

- Clone this repository:

git clone https://github.com/hackforthesea/whalematch.git

- Build the Docker image:

docker build -t whalematch:dev .

- Run a container from the built image:

docker run -it --rm -v `pwd`:/usr/src/app --name whalematch whalematch:dev bash

- From the new bash shell within the container, run

python prediction.py -i 'whale589.jpg'

Troubleshooting

If you have problems with installation or running the code, start here. Otherwise, contact one of the developers above or Isaac Vandor.